Convolution For Mac

Performing Convolution Operations

Convolution is a common image processing technique that changes the intensity of a pixel to reflect the intensities of the surrounding pixels. A common use of convolution is to create image filters. Using convolution, you can get popular image effects like blur, sharpen, and edge detection, which are used by applications such as Photo Booth, iPhoto, and Aperture. If you are interested in efficiently applying image filters to large or real-time image data, you will find the vImage functions useful. In terms of image filtering, vImage's convolution operations can perform common image filter effects such as embossing, blurring, and posterizing. vImage convolution operations can also be useful for sharpening or otherwise enhancing certain qualities of images.

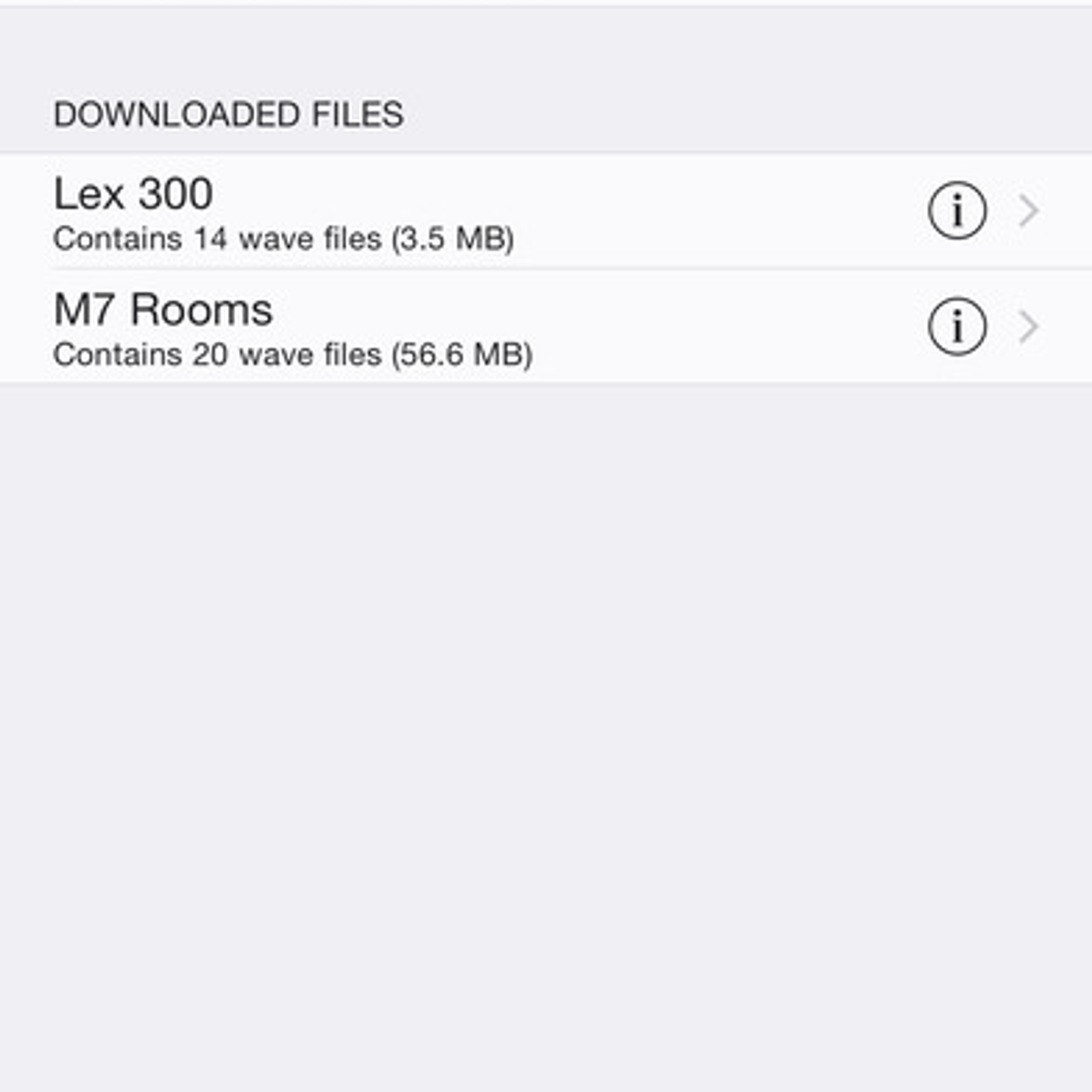


Enhancing images can be particularly useful when dealing with scientific images. Furthermore, since scientific images are often large, using these vImage operations can become necessary to achieve suitable application performance. Typical operations in this setting are edge detection, sharpening, surface contour outlining, smoothing, and motion detection. This chapter describes convolution and shows how to use the convolution functions provided by vImage. By reading this chapter, you'll:

- See the sorts of effects you can get with convolution.
- Learn what a kernel is and how to construct one.
- Get an introduction to commonly used kernels and high-speed variations.
- Find out, through code examples, how to apply vImage convolution functions to an image.

Convolution Kernels

Figure 2-1 shows an image before and after processing with a vImage convolution function that causes the emboss effect. To achieve this effect, vImage performs convolution using a grid-like mathematical construct called a kernel.

Figure 2-1 Emboss using convolution

Figure 2-2 represents a 3 x 3 kernel. The height and width of the kernel do not have to be the same, though they must both be odd numbers. The numbers inside the kernel determine the overall effect of convolution (in this case, the kernel encodes the emboss effect).

The kernel (or more specifically, the values held within the kernel) determines how to transform the pixels from the original image into the pixels of the processed image. It may not be obvious how the nine numbers in this kernel can yield an effect such as the previous emboss example. Convolution is a series of operations that alter pixel intensities depending on the intensities of neighboring pixels. vImage handles all the convolution operations based on the kernel that you supply.

The kernel provides the actual numbers that are used in those operations. Using kernels to perform convolutions is known as kernel convolution.

Figure 2-2 3 x 3 kernel

Convolutions are per-pixel operations: the same arithmetic is repeated for every pixel in the image.

Bigger images therefore require more arithmetic than smaller images for the same operation. A kernel can be thought of as a two-dimensional grid of numbers that passes over each pixel of an image in sequence, performing calculations along the way. Since images can also be thought of as two-dimensional grids of numbers (pixel intensities; see Figure 2-3), applying a kernel to an image can be visualized as a small grid (the kernel) moving across a substantially larger grid (the image).

Figure 2-3 An image is a grid of numbers

Each number in the kernel is the amount by which to multiply the number underneath it. The number underneath is the intensity of the pixel over which the kernel element is hovering. During convolution, the center of the kernel passes over each pixel in the image.

The process multiplies each number in the kernel by the pixel intensity value directly underneath it. This should result in as many products as there are numbers in the kernel (per pixel).


The final step of the process sums all of the products, divides the sum by the number of elements in the kernel, and uses the result as the new intensity of the pixel that was directly under the center of the kernel. Figure 2-4 shows how a kernel operates on one pixel.

Figure 2-4 Kernel convolution

Even though the kernel overlaps several different pixels (or in some cases, no pixels at all), the only pixel that it ultimately changes is the source pixel underneath the center element of the kernel.
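The per-pixel steps above can be sketched in a few lines of plain Python. This is an illustration of the arithmetic only, not vImage code: the function name `convolve_pixel` is hypothetical, and clamping out-of-range coordinates to the nearest edge pixel is an assumption of this sketch (the text does not say how pixels beyond the border are treated).

```python
def convolve_pixel(image, kernel, row, col):
    """Return the new intensity for image[row][col] under `kernel`."""
    k_h, k_w = len(kernel), len(kernel[0])
    height, width = len(image), len(image[0])
    total = 0
    for i in range(k_h):
        for j in range(k_w):
            # Pixel underneath kernel element (i, j) when the kernel
            # center sits on (row, col).
            # Assumption: coordinates past the border are clamped to
            # the nearest edge pixel.
            r = min(max(row + i - k_h // 2, 0), height - 1)
            c = min(max(col + j - k_w // 2, 0), width - 1)
            total += kernel[i][j] * image[r][c]
    # Divide the sum of products by the number of kernel elements.
    return total / (k_h * k_w)


image = [
    [10, 20, 30],
    [40, 50, 60],
    [70, 80, 90],
]
ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
center_value = convolve_pixel(image, ones, 1, 1)
print(center_value)
```

Only the pixel under the kernel center receives a new value; running the function for every `(row, col)` pair and writing the results into a separate output grid gives the full convolution.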

The sum of all the multiplications between the kernel and image is called the weighted sum. To ensure that the processed image is not noticeably more saturated than the original, vImage gives you the opportunity to specify a divisor by which to divide the weighted sum—a common practice in kernel convolution. Since replacing a pixel with the weighted sum of its neighboring pixels can frequently result in a much larger pixel intensity (and a brighter overall image), dividing the weighted sum can scale back the intensity of the effect and ensure that the initial brightness of the image is maintained. This procedure is called normalization.

The optionally divided weighted sum becomes the value of the center pixel. The kernel repeats this procedure for each pixel in the source image.

Note: To perform normalization, you must pass a divisor to the convolution function that you are using. Divisors that are an exact power of two may perform better in some cases. You can supply a divisor only when the image's pixel type is an integer type. Floating-point convolutions do not make use of divisors; instead, you can scale the floating-point values in the kernel directly to achieve the same normalization.
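To make the two normalization routes concrete, the following plain-Python sketch computes the weighted sum for one 3 x 3 neighborhood, first with an integer kernel and a divisor, then with a pre-scaled floating-point kernel. The kernel values, the patch, and the helper name `weighted_sum` are illustrative assumptions, and the integer division here does not model whatever rounding vImage applies.

```python
def weighted_sum(patch, kernel):
    """Sum of each kernel value times the pixel underneath it."""
    return sum(
        k * p
        for k_row, p_row in zip(kernel, patch)
        for k, p in zip(k_row, p_row)
    )


# A 3 x 3 neighborhood of pixel intensities (center pixel is 140).
patch = [
    [100, 110, 120],
    [130, 140, 150],
    [160, 170, 180],
]

# Integer route: integer kernel plus a divisor equal to the kernel sum.
int_kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
divisor = 16  # sum of the kernel values; also a power of two
raw = weighted_sum(patch, int_kernel)
normalized_int = raw // divisor

# Floating-point route: no divisor, the kernel is scaled instead.
float_kernel = [[k / divisor for k in row] for row in int_kernel]
normalized_float = weighted_sum(patch, float_kernel)

print(raw, normalized_int, normalized_float)
```

Without the divisor the result would be far outside the 0 to 255 range of an 8-bit pixel; with it, the output stays at the brightness level of the neighborhood.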

The data type used to represent the values in the kernel must match the data type used to represent the pixel values in the image. For example, if the pixel type is float, then the values in the kernel must also be float values. Keep in mind that vImage does all of this arithmetic for you, so it is not necessary to memorize the steps involved in convolution to be able to use the framework. It is still worth understanding what is going on so that you can tweak and experiment with your own kernels.

Deconvolution

Deconvolution is an operation that approximately undoes a previous convolution, typically a convolution that is physical in origin, such as diffraction effects in a lens. Usually, deconvolution is a sharpening operation.

There are many deconvolution algorithms; the one vImage uses is called the Richardson-Lucy deconvolution. The goal of Richardson-Lucy deconvolution is to find the original value of a pixel given the post-convolution pixel intensity and the number by which it was multiplied (the kernel value).


Due to these requirements, to use vImage’s deconvolution functions you must provide the convolved image and the kernel used to perform the original convolution. Represented as k in the following equation, the kernel serves as a way of letting vImage know what type of convolution it should undo. For example, sharpening an image (a common use of deconvolution) can be thought of as doing the opposite of a blur convolution.

If the kernel you supply is not symmetrical, you must pass a second kernel to the function that is the same as the first with the axes interchanged. Richardson-Lucy deconvolution is an iterative operation; your application specifies the number of iterations desired. The more iterations you make, the greater the sharpening effect (and the time consumed). As with any sharpening operation, Richardson-Lucy amplifies noise, and at some number of iterations the noise becomes noticeable as artifacts. Figure 2-5 shows the Richardson-Lucy algorithm expressed mathematically.

Figure 2-5 Richardson-Lucy deconvolution equation

In this equation:

- I is the starting image.
- e_i is the current result and e_{i+1} is being calculated; e_0 is 0.
- k_0 represents the kernel.
- k_1 is a second kernel to be used if k_0 is asymmetrical. If k_0 is symmetrical, k_0 is used as k_1.
- The * operator indicates convolution.
- The × operator indicates multiplication of each element in one matrix by the corresponding element in the other matrix.

As with convolution, vImage handles all the individual steps of deconvolution, so it is not necessary to memorize the steps involved. When deconvolving, all you must provide is the original convolution kernel (plus an additional inverted convolution kernel if the original kernel is not symmetrical).

Using Convolution Kernels

Now that you better understand the structure of kernels and the process behind convolution, it's time to actually use a few vImage convolution functions. This section shows you how to perform the emboss shown in Figure 2-1, and also explains the differences between convolving with and without bias.

Convolving

vImage takes care of the specific convolution operations for you. Your job is to provide the kernel, or in other words, describe the effect that convolution should produce. Listing 2-1 shows how to use convolution to produce an emboss effect. To illustrate this effect, convolving the leftmost image in Figure 2-1 produces the embossed image on the right. You could use the same code to produce a different effect, such as sharpening, by specifying the appropriate kernel.

Listing 2-1 Producing an emboss effect
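As a rough illustration of what an emboss convolution does to pixel data, here is a plain-Python sketch for an 8-bit grayscale image. It is not vImage code and not the listing referred to above. The kernel values in `EMBOSS` are a commonly used emboss kernel chosen for this sketch (the kernel from Figure 2-2 is not available here), and the edge clamping and the clipping to 0..255 are likewise assumptions.

```python
# Assumed emboss kernel for illustration; its values sum to 1.
EMBOSS = [
    [-2, -1, 0],
    [-1,  1, 1],
    [ 0,  1, 2],
]


def convolve(image, kernel, divisor=1):
    """Convolve an 8-bit grayscale image (list of rows) with `kernel`."""
    k_h, k_w = len(kernel), len(kernel[0])
    height, width = len(image), len(image[0])
    output = []
    for row in range(height):
        out_row = []
        for col in range(width):
            total = 0
            for i in range(k_h):
                for j in range(k_w):
                    # Assumption: clamp coordinates at the image border.
                    r = min(max(row + i - k_h // 2, 0), height - 1)
                    c = min(max(col + j - k_w // 2, 0), width - 1)
                    total += kernel[i][j] * image[r][c]
            value = total // divisor
            # Clip to the valid 8-bit range.
            out_row.append(min(max(value, 0), 255))
        output.append(out_row)
    return output


flat = [[100] * 4 for _ in range(4)]
edge = [[0, 0, 255, 255] for _ in range(3)]
print(convolve(flat, EMBOSS))
print(convolve(edge, EMBOSS))
```

Because the kernel sums to 1, flat regions keep their brightness, while intensity changes along the kernel's diagonal are exaggerated. Swapping in a different kernel (and divisor) changes the effect without touching the loop.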

Note: vImage does not provide floating-point versions of these functions because the constant cost algorithm relies on exact arithmetic to be robust. Rounding differences in the floating-point computation can leave artifacts in the image in low intensity areas near high intensity areas.

Box filters blur images by replacing each pixel in an image with the unweighted average of its surrounding pixels. The operation is equivalent to applying a convolution kernel filled with all 1s. vImage provides dedicated box functions for this. Each resulting pixel is the mean of the surrounding pixels as defined by the kernel height and width.

Figure 2-9 Box filter

You use tent filters to blur each pixel of an image with the weighted average of its surrounding pixels.

vImage also provides dedicated tent functions. These blur operations are equivalent to applying a convolution kernel filled with values that decrease with distance from the center. As with the box functions, you do not need to pass a kernel to the function, just the height and width. Specifically, for an M x N kernel size, the kernel is the product of an M x 1 column matrix and a 1 x N row matrix. Each has 1 as its first element, then values increasing by 1 up to the middle element, then decreasing by 1 to the last element.

Figure 2-10 Tent filter

For example, suppose the kernel size is 3 x 5. Then the first matrix is

$$\begin{pmatrix} 1 \\ 2 \\ 1 \end{pmatrix}$$

and the second is

$$\begin{pmatrix} 1 & 2 & 3 & 2 & 1 \end{pmatrix}$$

The product is

$$\begin{pmatrix} 1 & 2 & 3 & 2 & 1 \\ 2 & 4 & 6 & 4 & 2 \\ 1 & 2 & 3 & 2 & 1 \end{pmatrix}$$

The 3 x 5 tent filter operation is equivalent to convolution with the above matrix.

Using Multiple Kernels

vImage allows you to apply multiple kernels in a single convolution. The vImageConvolveMultiKernel functions let you specify four separate kernels, one for each channel in the image. This gives you a greater level of control when applying filters to an image, since you can operate on the red, green, blue, and alpha channels individually. For example, you can use multikernel convolutions to resample the color channels of an image differently to compensate for the positioning of RGB phosphors on the screen. Since each of the four kernels operates on a single channel, the vImageConvolveMultiKernel functions are available only for interleaved image formats.
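The idea of one kernel per channel can be sketched in plain Python as follows. This is not the vImageConvolveMultiKernel API: the function name `convolve_multi_kernel`, the (A, R, G, B) tuple layout, the per-channel divisors, and the edge clamping are all assumptions made for the illustration.

```python
def convolve_multi_kernel(image, kernels, divisors):
    """Apply kernels[ch] (with divisors[ch]) to channel ch of each pixel.

    `image` is a list of rows of interleaved (A, R, G, B) tuples.
    """
    height, width = len(image), len(image[0])
    output = []
    for row in range(height):
        out_row = []
        for col in range(width):
            new_pixel = []
            for ch, (kernel, divisor) in enumerate(zip(kernels, divisors)):
                k_h, k_w = len(kernel), len(kernel[0])
                total = 0
                for i in range(k_h):
                    for j in range(k_w):
                        # Assumption: clamp coordinates at the border.
                        r = min(max(row + i - k_h // 2, 0), height - 1)
                        c = min(max(col + j - k_w // 2, 0), width - 1)
                        total += kernel[i][j] * image[r][c][ch]
                new_pixel.append(min(max(total // divisor, 0), 255))
            out_row.append(tuple(new_pixel))
        output.append(out_row)
    return output


IDENTITY = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
BOX = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]

# Alpha is opaque, red varies by column, green is constant,
# blue takes the values 10..90.
image = [
    [(255, 10 * col, 20, 10 * (3 * row + col + 1)) for col in range(3)]
    for row in range(3)
]

# Leave A, R, and G untouched; blur only the blue channel.
result = convolve_multi_kernel(
    image, [IDENTITY, IDENTITY, IDENTITY, BOX], [1, 1, 1, 9]
)
print(result[1][1])
```

The kernels are passed as a list of four, which mirrors the requirement to supply four kernels rather than one.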

The use of these functions is identical to convolution with a single kernel, except that you must supply an array of pointers to the four kernels instead of a single kernel.

Deconvolving

As with convolution, vImage takes care of performing the Richardson-Lucy algorithm described earlier. Your job is to provide the kernel (referred to as the point spread function) of the initial convolution. Listing 2-2 shows how to deconvolve an emboss effect. You could use the same code to deconvolve various effects, such as blurring, by specifying the appropriate kernel. Note that unlike the convolution operations, there is an additional kernel parameter. This parameter can be NULL unless the height and width of the kernel are not the same size.

If the kernel height and width are unequal, you must supply a second kernel that is identical to the first but with its rows and columns interchanged. Listing 2-2 is an example of how to use vImage to deconvolve an ARGB8888-formatted image.

Listing 2-2 Deconvolving an embossed image
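To give a feel for the iteration, here is a one-dimensional Richardson-Lucy sketch in plain Python. It is not vImage code. It assumes the usual form of the update, e_{i+1} = e_i × ((I / (e_i * k_0)) * k_1), with * as convolution and × as element-wise multiplication. Two further assumptions: the estimate starts from the observed signal (a multiplicative update would leave an all-zero estimate unchanged), and borders are handled by clamping. The names `convolve_1d` and `richardson_lucy` are hypothetical.

```python
def convolve_1d(signal, kernel):
    """Convolve a 1-D signal, clamping indices at the borders."""
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        total = 0.0
        for j, k in enumerate(kernel):
            idx = min(max(i + j - half, 0), n - 1)
            total += k * signal[idx]
        out.append(total)
    return out


def richardson_lucy(observed, k0, iterations, k1=None):
    """Iteratively sharpen `observed`, which was blurred with kernel k0."""
    if k1 is None:
        k1 = k0  # a symmetrical kernel serves as its own second kernel
    estimate = list(observed)  # assumption: start from the observed data
    for _ in range(iterations):
        reblurred = convolve_1d(estimate, k0)
        # Guard against division by zero in empty regions.
        ratio = [o / r if r > 0 else 0.0 for o, r in zip(observed, reblurred)]
        correction = convolve_1d(ratio, k1)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


blur = [0.25, 0.5, 0.25]
sharp = [1, 1, 1, 1, 5, 1, 1, 1, 1]
observed = convolve_1d(sharp, blur)
restored = richardson_lucy(observed, blur, 10)
print([round(v, 2) for v in restored])
```

Each pass pushes the blurred peak back toward its original height, which is the sharpening effect described above; more iterations sharpen further and, on noisy data, would also amplify the noise.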

Hi, I'm glad that you find my software useful. That some hosts crash when the plugin is unloaded is a known issue and I have to apologize that it's not yet fixed. Writing HybridReverb2 was really fun, but I have to focus on finishing my doctoral thesis now.

As I'm using it myself for playing piano, I will further develop/support it, but not in the near future as I won't have the time for it. New versions will be announced in this KVR thread: If someone else wants to do that, go ahead! The source code is available at. Ciao, Christian P.S.: One of the first things which I bought from my DC'09 cash prize was a Reaper license.

Christian, thanks for this plugin and congratulations on your prize at KVR. Those IRs you've made are very clean and the plug is so tweakable. This is going to be useful. Also, I found the GUI especially well done, fun and easy to use.

Kinda wish you could make a little money on it so you'll be motivated to maintain it! So much of the software out of academia is unusable: it never gets finished or tested, etc. (I really wish they had a Google 'Summer of QA' for some of these projects, like CLAM.)

FYI, Hybrid2 works fine in Bidule, both standalone and hosted within an AU or VST Bidule in Reaper; no crashes on exit. Workaround for those that have Bidule. Hi guys, Thanks for the heads up about this convolution reverb. I used it with some M7 Bricassti 'large and Near' IR's I downloaded a while ago. I used one of the built in studio reverbs for the 'close' parts.

The quality is quite amazing. The only problem is that it won't save the settings with the Reaper session, so you need to save them externally as an .XML file and load it each time you open the session. (I can't seem to get it to save presets in its list.) Here is a piece that I added the reverbs to: The instruments are all Garritan GPO4 VSTi.